API and Documentation

Model

A probabilistic model is a joint distribution \(p(\mathbf{x}, \mathbf{z})\) of data \(\mathbf{x}\) and latent variables \(\mathbf{z}\). For background, see the Probabilistic Models tutorial.

In Edward, we specify models using a simple language of random variables. A random variable \(\mathbf{x}\) is an object parameterized by tensors \(\theta^*\), where the number of random variables in one object is determined by the dimensions of its parameters.

import tensorflow as tf
from edward.models import Normal, Exponential

# univariate normal

Normal(loc=tf.constant(0.0), scale=tf.constant(1.0))

# vector of 5 univariate normals

Normal(loc=tf.zeros(5), scale=tf.ones(5))

# 2 x 3 matrix of Exponentials

Exponential(rate=tf.ones([2, 3]))

For multivariate distributions, the multivariate dimension is the innermost (right-most) dimension of the parameters.
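The shape convention described above can be sketched in plain Python, without TensorFlow or Edward. The helper `split_shape` is hypothetical and not part of Edward; the names "batch shape" and "event shape" are borrowed from TensorFlow Distributions terminology, and `K = 3` is an arbitrary illustrative dimension.

```python
# Hypothetical helper (not part of Edward): split the shape of a `loc`-style
# parameter into the dimensions that index separate random variables
# (batch shape) and the multivariate dimension (event shape).
def split_shape(param_shape, multivariate):
    shape = tuple(param_shape)
    if multivariate:
        # The right-most dimension indexes the components of one variable.
        return shape[:-1], shape[-1:]
    # Univariate: every entry of the parameter is its own random variable.
    return shape, ()


K = 3  # illustrative dimension (an assumption)

print(split_shape([5], multivariate=False))        # vector of 5 univariate variables
print(split_shape([2, 3], multivariate=False))     # 2 x 3 matrix of univariate variables
print(split_shape([K], multivariate=True))         # one K-dimensional variable
print(split_shape([5, K], multivariate=True))      # vector of 5 K-dimensional variables
print(split_shape([2, 5, K], multivariate=True))   # 2 x 5 matrix of K-dimensional variables
```

The same rule is at work in the multivariate examples that follow: the trailing dimension of `loc` is the multivariate dimension, and the leading dimensions count the random variables in the object.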

from edward.models import Dirichlet, MultivariateNormalTriL

K = 3  # illustrative dimension (an assumption; any positive integer works)

# K-dimensional Dirichlet

Dirichlet(concentration=tf.constant([0.1] * K))

# vector of 5 K-dimensional multivariate normals with lower triangular cov

MultivariateNormalTriL(loc=tf.zeros([5, K]), scale_tril=tf.ones([5, K, K]))

# 2 x 5 matrix of K-dimensional multivariate normals

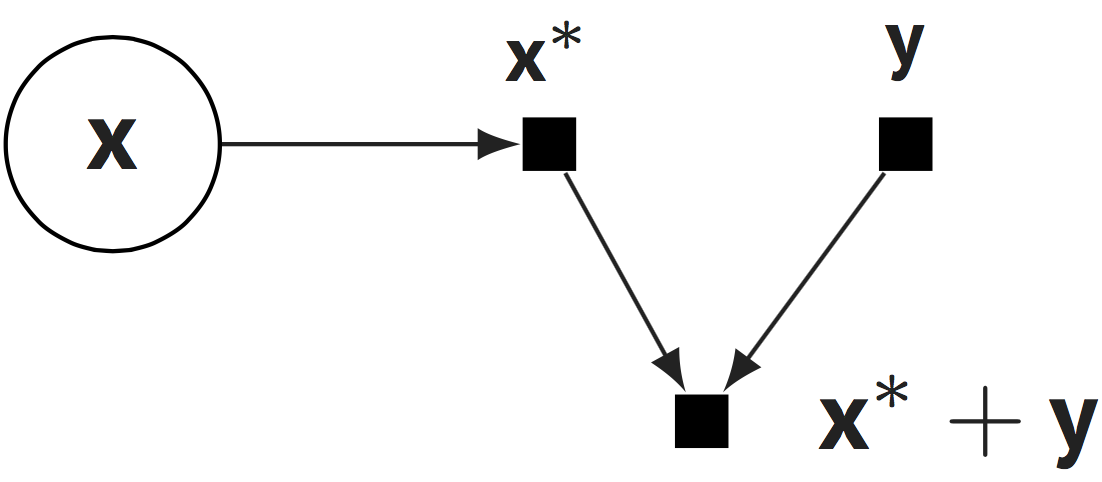

MultivariateNormalTriL(loc=tf.zeros([2, 5, K]), scale_tril=tf.ones([2, 5, K, K]))

Random variables are equipped with methods such as log_prob(), which computes \(\log p(\mathbf{x}\mid\theta^*)\); mean(), which computes \(\mathbb{E}_{p(\mathbf{x}\mid\theta^*)}[\mathbf{x}]\); and sample(), which draws \(\mathbf{x}^*\sim p(\mathbf{x}\mid\theta^*)\). Further, each random variable is associated with a tensor \(\mathbf{x}^*\) in the computational graph, which represents a single sample \(\mathbf{x}^*\sim p(\mathbf{x}\mid\theta^*)\).
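As a rough illustration of what these methods compute, here is a minimal pure-Python sketch for a single univariate normal. The class `ToyNormal` and its `seed` argument are hypothetical stand-ins, not Edward's implementation; the `value` attribute plays the role of the associated sample \(\mathbf{x}^*\).

```python
import math
import random


class ToyNormal:
    """Illustrative univariate normal; a stand-in, not Edward's class."""

    def __init__(self, loc, scale, seed=None):
        self.loc = loc
        self.scale = scale
        self._rng = random.Random(seed)
        # One sample drawn at construction, playing the role of x*.
        self.value = self.sample()

    def log_prob(self, x):
        # log p(x | loc, scale) for the normal density.
        z = (x - self.loc) / self.scale
        return -0.5 * z * z - math.log(self.scale) - 0.5 * math.log(2.0 * math.pi)

    def mean(self):
        # E[x] under a normal distribution is its location parameter.
        return self.loc

    def sample(self):
        # A fresh draw x* ~ p(x | loc, scale).
        return self._rng.gauss(self.loc, self.scale)


x = ToyNormal(loc=0.0, scale=1.0, seed=0)
print(x.log_prob(0.0))  # equals -0.5 * log(2 * pi)
print(x.mean())
print(x.sample())       # a new draw on every call
print(x.value)          # the single sample fixed at construction
```

In this sketch, `sample()` returns a new draw each time, while `value` stays fixed after construction.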

This makes it easy to parameterize random variables with complex deterministic structure, such as deep neural networks or a diverse set of math operations, and keeps them compatible with third-party libraries that also build on TensorFlow. The design also enables compositions of random variables to capture complex stochastic structure. Operations applied to a random variable act on its associated tensor \(\mathbf{x}^*\).

from edward.models import Normal

x = Normal(loc=tf.zeros(10), scale=tf.ones(10))

y = tf.constant(5.0)

x + y, x - y, x * y, x / y

tf.tanh(x * y)

x[2]  # 3rd normal rv in the vector

In the compositionality page, we describe how to build models by composing random variables.
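The idea that operators act on the associated sample can be sketched with Python operator overloading. This is a toy model under stated assumptions, not how Edward is implemented: `ToyRV` and `_raw` are hypothetical names, and the wrapper holds one scalar sample instead of a tensor.

```python
import math
import random


def _raw(v):
    # Unwrap a ToyRV to its sample; pass plain numbers through unchanged.
    return v.value if isinstance(v, ToyRV) else v


class ToyRV:
    """Hypothetical wrapper pairing normal parameters with one fixed sample."""

    def __init__(self, loc, scale, seed=None):
        self.loc = loc
        self.scale = scale
        # The sample x* that every operator below acts on.
        self.value = random.Random(seed).gauss(loc, scale)

    def __add__(self, other):
        return self.value + _raw(other)

    def __sub__(self, other):
        return self.value - _raw(other)

    def __mul__(self, other):
        return self.value * _raw(other)

    def __truediv__(self, other):
        return self.value / _raw(other)


x = ToyRV(loc=0.0, scale=1.0, seed=1)
y = 5.0

print(x + y, x - y, x * y, x / y)  # arithmetic on the sample x*
print(math.tanh(x * y))            # any function of the result works
```

Each operator returns an ordinary number computed from `x.value`, which mirrors how the expressions above operate on \(\mathbf{x}^*\) and not on the distribution itself.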

ed.models.RandomVariable
ed.models.Autoregressive
ed.models.Bernoulli
ed.models.BernoulliWithSigmoidProbs
ed.models.Beta
ed.models.BetaWithSoftplusConcentration
ed.models.Binomial
ed.models.Categorical
ed.models.Cauchy
ed.models.Chi2
ed.models.Chi2WithAbsDf
ed.models.ConditionalDistribution
ed.models.ConditionalTransformedDistribution
ed.models.Deterministic
ed.models.Dirichlet
ed.models.DirichletMultinomial
ed.models.DirichletProcess
ed.models.Empirical
ed.models.ExpRelaxedOneHotCategorical
ed.models.Exponential
ed.models.ExponentialWithSoftplusRate
ed.models.Gamma
ed.models.GammaWithSoftplusConcentrationRate
ed.models.Geometric
ed.models.HalfNormal
ed.models.Independent
ed.models.InverseGamma
ed.models.InverseGammaWithSoftplusConcentrationRate
ed.models.Laplace
ed.models.LaplaceWithSoftplusScale
ed.models.Logistic
ed.models.Mixture
ed.models.MixtureSameFamily
ed.models.Multinomial
ed.models.MultivariateNormalDiag
ed.models.MultivariateNormalDiagPlusLowRank
ed.models.MultivariateNormalDiagWithSoftplusScale
ed.models.MultivariateNormalFullCovariance
ed.models.MultivariateNormalTriL
ed.models.NegativeBinomial
ed.models.Normal
ed.models.NormalWithSoftplusScale
ed.models.OneHotCategorical
ed.models.ParamMixture
ed.models.PointMass
ed.models.Poisson
ed.models.PoissonLogNormalQuadratureCompound
ed.models.QuantizedDistribution
ed.models.RelaxedBernoulli
ed.models.RelaxedOneHotCategorical
ed.models.SinhArcsinh
ed.models.StudentT
ed.models.StudentTWithAbsDfSoftplusScale
ed.models.TransformedDistribution
ed.models.Uniform
ed.models.VectorDeterministic
ed.models.VectorDiffeomixture
ed.models.VectorExponentialDiag
ed.models.VectorLaplaceDiag
ed.models.VectorSinhArcsinhDiag
ed.models.WishartCholesky
ed.models.WishartFull